Spring Batch Tutorial

In this lesson, we will learn about Spring Batch. To build a running example, we will create a small project using Spring’s Java configuration and Maven. Let’s get started by adding the appropriate dependency.

Maven Dependency

Add the following Maven dependency:

```xml
<dependencies>
    <dependency>
        <groupId>org.springframework.batch</groupId>
        <artifactId>spring-batch-core</artifactId>
        <version>3.0.7.RELEASE</version>
    </dependency>
</dependencies>
```

Find the latest version of the Maven dependency here.

Spring Batch

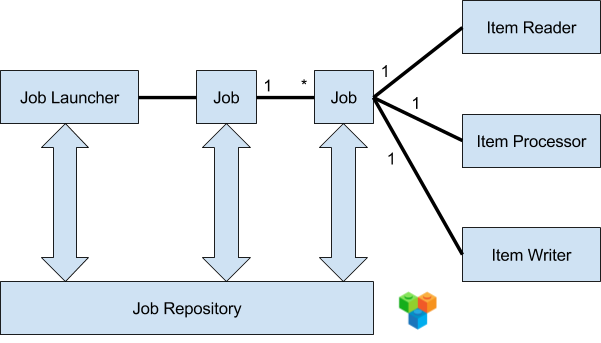

Spring Batch is a processing framework designed for robust and parallel execution of jobs. It follows the standard batch architecture: a job launcher starts jobs, and a job repository stores the state and metadata of every job execution.

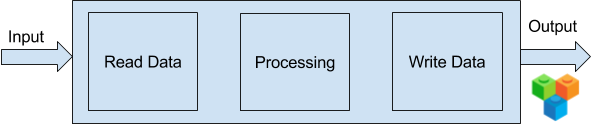

A job can have multiple steps, and each step typically follows the same sequence: read data, process it, and then write it.
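To make the step sequence concrete, here is a minimal plain-Java sketch of a job that runs its steps in order. It uses no Spring Batch classes. `SimpleJobSketch`, its nested `Step` type, and the status strings are hypothetical. The sketch assumes the default sequential behavior, where a failing step fails the whole job and the remaining steps do not run.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BooleanSupplier;

// Hypothetical model of a sequential job; NOT the Spring Batch API.
class SimpleJobSketch {

    // A named step whose body reports success (true) or failure (false).
    static final class Step {
        final String name;
        final BooleanSupplier body;

        Step(String name, BooleanSupplier body) {
            this.name = name;
            this.body = body;
        }
    }

    // Names of the steps that actually ran, in order.
    final List<String> executed = new ArrayList<>();

    // Runs the steps in order; the first failing step fails the job
    // and the remaining steps are never executed.
    String run(List<Step> steps) {
        for (Step step : steps) {
            executed.add(step.name);
            if (!step.body.getAsBoolean()) {
                return "FAILED";
            }
        }
        return "COMPLETED";
    }

    public static void main(String[] args) {
        SimpleJobSketch job = new SimpleJobSketch();
        String status = job.run(List.of(
                new Step("readCsv", () -> true),
                new Step("writeXml", () -> false),
                new Step("cleanup", () -> true)));
        System.out.println(status + " after " + job.executed);
    }
}
```

Running `main` prints `FAILED after [readCsv, writeXml]`. The `cleanup` step never runs because `writeXml` failed.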

The framework does most of the heavy lifting for us. In this example, it uses SQLite to store the job repository. This is especially true for the low-level persistence work of keeping track of job executions.

Batch Job

A batch job is a computer program or set of instructions processed as a batch. It is a sequence of commands listed in a file (often called a batch file, command file, or shell script). That file is submitted to the operating system and executed as a single unit.

Let us try to visualize a job with a figure:

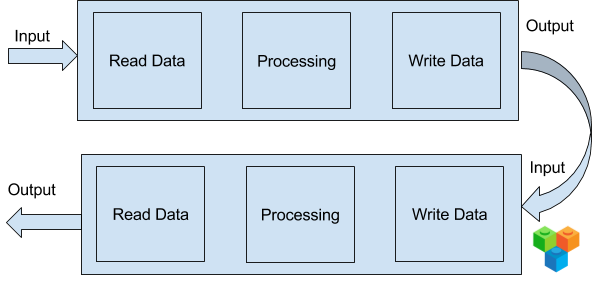

Note that the image above visualizes only a job, not a batch job. Let us use another image to visualize a batch job:

The image above is a better description of a batch job, which includes multiple jobs. Only when all of the jobs are done can the batch be declared done.

We have now defined the term batch job. Let’s find out why we should implement our batch jobs by using Spring Batch.

Problems with custom-written jobs

Many hand-written batch jobs suffer from the same problems because they do not use any framework or library. Their authors end up reinventing the wheel, and errors creep in along the way:

- The app that implements a batch job is lengthy. Because it has only one huge step, no one can really understand how the batch job works.

- The transaction in a job is huge and therefore very slow.

- The batch job doesn’t have real error handling. If an error occurs during a batch job, the complete job fails, although the error can at least be written to a log file.

- The batch job doesn’t acknowledge its final state. In other words, there is no straightforward way to find out if the batch job has finished successfully.

Solution to above problems

We can (of course) fix every one of these problems. If we decide to follow this approach, we face two new problems:

- We essentially have to create an in-house batch job framework, and it is extremely hard to get everything right the first time.

- Creating an in-house batch job framework is a large, daunting, and time-consuming task. This means that we are unlikely to find the time to fix the problems in our framework’s initial version.

Solution to everything: Spring Batch

Now, there’s no need to implement our own batch job framework since Spring Batch takes care of all of these problems. It provides the following features that help us to solve these problems:

- Spring Batch helps us keep our code clean by providing the infrastructure used to implement, configure, and run batch jobs.

- With chunk-oriented processing, items are read and processed one by one, and the transaction is committed each time the chunk size is reached. In other words, it gives us a simple way to manage the size of our transactions.

- It provides proper error handling. For instance, we can skip items if an exception is thrown and configure retry logic that is used to determine whether our batch job should retry the failed operation. Further, we can configure the logic being used to decide whether or not the transaction will be rolled back.

- It records enough information in the job repository database. This log contains the metadata of every job execution and step execution, and we can use it for debugging. We can access this information with a database client or a graphical admin user interface.
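The retry and skip options in the list above can be modeled in a few lines of plain Java. This is a simplified, hypothetical sketch. `RetrySkipSketch` is not part of Spring Batch, and real fault-tolerant steps also roll back and re-process chunks. The rules it models are:

- Each item gets up to `maxAttempts` tries.
- An item that still fails after those tries is skipped.
- Once more than `skipLimit` items have been skipped, the step fails.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

// Hypothetical plain-Java model of retry + skip handling; not Spring Batch code.
class RetrySkipSketch {

    // Thrown when more items fail than the skip limit allows.
    static class SkipLimitExceeded extends RuntimeException {
        SkipLimitExceeded(String message) {
            super(message);
        }
    }

    final List<String> processed = new ArrayList<>();
    final List<String> skipped = new ArrayList<>();

    void process(List<String> items, Function<String, String> operation,
                 int maxAttempts, int skipLimit) {
        for (String item : items) {
            String result = null;
            boolean succeeded = false;
            // Retry: try the operation up to maxAttempts times.
            for (int attempt = 1; attempt <= maxAttempts && !succeeded; attempt++) {
                try {
                    result = operation.apply(item);
                    succeeded = true;
                } catch (RuntimeException e) {
                    // Ignore and try again while attempts remain.
                }
            }
            if (succeeded) {
                processed.add(result);
            } else {
                // Skip: record the bad item; fail once the limit is exceeded.
                skipped.add(item);
                if (skipped.size() > skipLimit) {
                    throw new SkipLimitExceeded("Too many bad items: " + skipped);
                }
            }
        }
    }

    public static void main(String[] args) {
        RetrySkipSketch step = new RetrySkipSketch();
        // Integer.parseInt fails on "n/a" every time, so retrying cannot help.
        step.process(List.of("90109", "n/a", "15239"),
                s -> String.valueOf(Integer.parseInt(s) * 2), 3, 1);
        System.out.println(step.processed + " skipped=" + step.skipped);
    }
}
```

In `main`, `"n/a"` cannot be parsed on any attempt, so it is skipped, and the program prints `[180218, 30478] skipped=[n/a]`. A transient failure that clears up within `maxAttempts` tries would be retried rather than skipped.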

So now we see how Spring Batch can help us solve the problems of handwritten batch jobs. Let’s move on and take a quick look at the anatomy of a Spring Batch job.

How does a Spring Batch job work?

A Spring Batch job consists of the following components:

- The Job represents the Spring Batch job. Each job can have one or more steps.

- The Step represents an independent logical task (e.g. importing information from an input file). Each step belongs to a single job.

- The ItemReader reads the input data and returns the items it finds, one by one. An ItemReader belongs to one step, and each step must have exactly one ItemReader.

- The ItemProcessor transforms each item into a form that the ItemWriter understands, one item at a time. An ItemProcessor belongs to one step, and each step can have at most one ItemProcessor (it is optional).

- The ItemWriter writes the processed items to the output. It receives a whole chunk of items at a time rather than a single item. An ItemWriter belongs to one step, and each step must have exactly one ItemWriter.
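This division of labor can be sketched in plain Java. The `SketchReader`, `SketchProcessor`, and `SketchWriter` interfaces below are hypothetical stand-ins that mirror the roles; they are not the `org.springframework.batch.item` types. The sketch works like this:

- The reader returns one item per call and `null` at the end of the input.
- The processor transforms one item at a time.
- The writer receives a whole chunk at once. At that point, a real step would commit its transaction.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

// Hypothetical stand-ins for the three roles; not the Spring Batch interfaces.
interface SketchReader<T> {
    T read(); // returns the next item, or null when the input is exhausted
}

interface SketchProcessor<I, O> {
    O process(I item); // transforms a single item
}

interface SketchWriter<T> {
    void write(List<T> chunk); // receives a whole chunk at once
}

class ChunkStepSketch {

    // Reads and processes items one at a time and hands a chunk to the writer
    // every chunkSize items (where a real step would commit). Any final,
    // smaller chunk is written at the end.
    static <I, O> void run(SketchReader<I> reader, SketchProcessor<I, O> processor,
                           SketchWriter<O> writer, int chunkSize) {
        List<O> chunk = new ArrayList<>();
        for (I item = reader.read(); item != null; item = reader.read()) {
            chunk.add(processor.process(item));
            if (chunk.size() == chunkSize) {
                writer.write(chunk);
                chunk = new ArrayList<>();
            }
        }
        if (!chunk.isEmpty()) {
            writer.write(chunk);
        }
    }

    public static void main(String[] args) {
        Iterator<String> names = List.of("liran", "shubham", "rabbit").iterator();
        SketchReader<String> reader = () -> names.hasNext() ? names.next() : null;
        SketchProcessor<String, String> upper = name -> name.toUpperCase();
        SketchWriter<String> printer = chunk -> System.out.println("write " + chunk);
        run(reader, upper, printer, 2);
    }
}
```

With a chunk size of 2, `main` prints `write [LIRAN, SHUBHAM]` followed by `write [RABBIT]`. The last chunk is simply smaller.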

Example Use Case

Before starting, note that you can find the sample Spring project here on GitHub.

We’ll look at a simple use case: migrating a few financial transactions from CSV format to XML.

The input file’s structure is straightforward, with one transaction per line. Each line includes the username, user ID, transaction date, and transaction amount:

```
username, user_id, transaction_date, transaction_amount
liran, 2091, 21/10/1980, 90109
shubham, 3280, 13/02/1990, 87875
rabbit, 3234, 12/12/2000, 15239
```

Spring Batch Config

The first thing we’ll do is configure Spring Batch with Java:

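```java
import org.springframework.batch.core.Job;
import org.springframework.batch.core.Step;
import org.springframework.batch.core.configuration.annotation.JobBuilderFactory;
import org.springframework.batch.core.configuration.annotation.StepBuilderFactory;
import org.springframework.batch.item.ItemProcessor;
import org.springframework.batch.item.ItemReader;
import org.springframework.batch.item.ItemWriter;
import org.springframework.batch.item.file.FlatFileItemReader;
import org.springframework.batch.item.file.mapping.DefaultLineMapper;
import org.springframework.batch.item.file.transform.DelimitedLineTokenizer;
import org.springframework.batch.item.xml.StaxEventItemWriter;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.core.io.Resource;
import org.springframework.oxm.Marshaller;
import org.springframework.oxm.jaxb.Jaxb2Marshaller;

@Configuration
public class SpringBatchConfig {

    // Builder factories provided by @EnableBatchProcessing.
    @Autowired
    private JobBuilderFactory jobs;

    @Autowired
    private StepBuilderFactory steps;

    @Value("input/items.csv")
    private Resource itemsCsv;

    @Value("file:xml/output.xml")
    private Resource outputXml;

    @Bean
    public ItemReader<Transaction> itemReader() {
        FlatFileItemReader<Transaction> reader = new FlatFileItemReader<Transaction>();
        // Split each line on commas and give each field a name.
        DelimitedLineTokenizer tokenizer = new DelimitedLineTokenizer();
        String[] tokens = { "username", "userid", "transactiondate", "amount" };
        tokenizer.setNames(tokens);
        reader.setResource(itemsCsv);
        // Skip the header line of items.csv.
        reader.setLinesToSkip(1);
        DefaultLineMapper<Transaction> lineMapper = new DefaultLineMapper<Transaction>();
        lineMapper.setLineTokenizer(tokenizer);
        lineMapper.setFieldSetMapper(new RecordFieldSetMapper());
        reader.setLineMapper(lineMapper);
        return reader;
    }

    @Bean
    public ItemProcessor<Transaction, Transaction> itemProcessor() {
        return new CustomItemProcessor();
    }

    @Bean
    public ItemWriter<Transaction> itemWriter(Marshaller marshaller) {
        StaxEventItemWriter<Transaction> itemWriter = new StaxEventItemWriter<Transaction>();
        itemWriter.setMarshaller(marshaller);
        itemWriter.setRootTagName("transactionRecord");
        itemWriter.setResource(outputXml);
        return itemWriter;
    }

    // JAXB marshaller that turns Transaction objects into XML elements.
    @Bean
    public Marshaller marshaller() {
        Jaxb2Marshaller marshaller = new Jaxb2Marshaller();
        marshaller.setClassesToBeBound(new Class[] { Transaction.class });
        return marshaller;
    }

    // Chunk-oriented step: commit after every 10 items.
    @Bean
    protected Step step1(ItemReader<Transaction> reader,
                         ItemProcessor<Transaction, Transaction> processor,
                         ItemWriter<Transaction> writer) {
        return steps.get("step1").<Transaction, Transaction> chunk(10)
                .reader(reader).processor(processor).writer(writer).build();
    }

    @Bean(name = "firstBatchJob")
    public Job job(@Qualifier("step1") Step step1) {
        return jobs.get("firstBatchJob").start(step1).build();
    }
}
```

Spring Batch Job Config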

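Next, let’s configure the infrastructure the job runs on: a SQLite data source, the job repository schema, and the job launcher:

```java
import javax.sql.DataSource;
import org.springframework.batch.core.configuration.annotation.EnableBatchProcessing;
import org.springframework.batch.core.launch.JobLauncher;
import org.springframework.batch.core.launch.support.SimpleJobLauncher;
import org.springframework.batch.core.repository.JobRepository;
import org.springframework.batch.core.repository.support.JobRepositoryFactoryBean;
import org.springframework.batch.support.transaction.ResourcelessTransactionManager;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.core.io.Resource;
import org.springframework.jdbc.datasource.DriverManagerDataSource;
import org.springframework.jdbc.datasource.init.DataSourceInitializer;
import org.springframework.jdbc.datasource.init.ResourceDatabasePopulator;
import org.springframework.transaction.PlatformTransactionManager;

// @EnableBatchProcessing registers the jobRepository, jobLauncher, jobBuilders
// and stepBuilders beans used by SpringBatchConfig and App.
@Configuration
@EnableBatchProcessing
public class BatchConfig {

    // Schema scripts bundled in the spring-batch-core jar.
    @Value("org/springframework/batch/core/schema-drop-sqlite.sql")
    private Resource dropRepositoryTables;

    @Value("org/springframework/batch/core/schema-sqlite.sql")
    private Resource dataRepositorySchema;

    @Bean
    public DataSource dataSource() {
        DriverManagerDataSource dataSource = new DriverManagerDataSource();
        dataSource.setDriverClassName("org.sqlite.JDBC");
        dataSource.setUrl("jdbc:sqlite:repository.sqlite");
        return dataSource;
    }

    // Drops and recreates the job repository tables on every start.
    @Bean
    public DataSourceInitializer dataSourceInitializer(DataSource dataSource) {
        ResourceDatabasePopulator databasePopulator = new ResourceDatabasePopulator();
        databasePopulator.addScript(dropRepositoryTables);
        databasePopulator.addScript(dataRepositorySchema);
        databasePopulator.setIgnoreFailedDrops(true);
        DataSourceInitializer initializer = new DataSourceInitializer();
        initializer.setDataSource(dataSource);
        initializer.setDatabasePopulator(databasePopulator);
        return initializer;
    }

    // Manual wiring of a repository and launcher. These helpers are not beans,
    // so App uses the jobLauncher bean from @EnableBatchProcessing instead.
    private JobRepository getJobRepository() throws Exception {
        JobRepositoryFactoryBean factory = new JobRepositoryFactoryBean();
        factory.setDataSource(dataSource());
        factory.setTransactionManager(getTransactionManager());
        factory.afterPropertiesSet();
        return factory.getObject();
    }

    private PlatformTransactionManager getTransactionManager() {
        return new ResourcelessTransactionManager();
    }

    public JobLauncher getJobLauncher() throws Exception {
        SimpleJobLauncher jobLauncher = new SimpleJobLauncher();
        jobLauncher.setJobRepository(getJobRepository());
        jobLauncher.afterPropertiesSet();
        return jobLauncher;
    }
}
```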

Read Data and Create Objects with ItemReader

First, we configured the itemReader bean, a FlatFileItemReader that reads the data from items.csv and converts each line into a Transaction object:

```java
import java.util.Date;
import javax.xml.bind.annotation.XmlRootElement;

@SuppressWarnings("restriction")
@XmlRootElement(name = "transactionRecord")
public class Transaction {

    private String username;
    private int userId;
    private Date transactionDate;
    private double amount;

    /* getters and setters for the attributes */

}
```

To map each line to a Transaction, the reader uses a custom field set mapper:

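```java
import java.text.ParseException;
import java.text.SimpleDateFormat;
import org.springframework.batch.item.file.mapping.FieldSetMapper;
import org.springframework.batch.item.file.transform.FieldSet;

public class RecordFieldSetMapper implements FieldSetMapper<Transaction> {

    @Override
    public Transaction mapFieldSet(FieldSet fieldSet) {
        // SimpleDateFormat is not thread-safe, so create one per call.
        SimpleDateFormat dateFormat = new SimpleDateFormat("dd/MM/yyyy");
        Transaction transaction = new Transaction();
        // Read fields by the names given to the tokenizer in itemReader().
        transaction.setUsername(fieldSet.readString("username"));
        transaction.setUserId(fieldSet.readInt("userid"));
        transaction.setAmount(fieldSet.readDouble("amount"));
        String dateString = fieldSet.readString("transactiondate");
        try {
            transaction.setTransactionDate(dateFormat.parse(dateString));
        } catch (ParseException e) {
            // Fail loudly instead of silently leaving the date null.
            throw new IllegalArgumentException("Invalid transaction date: " + dateString, e);
        }
        return transaction;
    }
}
```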
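For comparison, the tokenize-then-map work done by `DelimitedLineTokenizer` and `RecordFieldSetMapper` can be sketched with the JDK alone. The `TransactionLineParser` class and its `ParsedTransaction` record are hypothetical, and records need Java 16 or later. The sketch uses `java.time.LocalDate` with a `DateTimeFormatter`, which is immutable and thread-safe, unlike `SimpleDateFormat`. It also trims the spaces that follow each comma in the sample file.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;

// Hypothetical JDK-only equivalent of the tokenizer + field set mapper pair.
class TransactionLineParser {

    // Immutable and thread-safe, so a single shared instance is fine.
    private static final DateTimeFormatter DATE = DateTimeFormatter.ofPattern("dd/MM/yyyy");

    // Simple value type standing in for the Transaction class.
    record ParsedTransaction(String username, int userId, LocalDate transactionDate, double amount) { }

    static ParsedTransaction parse(String line) {
        // Tokenizer role: split on commas.
        String[] f = line.split(",");
        if (f.length != 4) {
            throw new IllegalArgumentException("Expected 4 fields but got " + f.length + ": " + line);
        }
        // Mapper role: trim each field and convert it to its target type.
        return new ParsedTransaction(
                f[0].trim(),
                Integer.parseInt(f[1].trim()),
                LocalDate.parse(f[2].trim(), DATE),
                Double.parseDouble(f[3].trim()));
    }

    public static void main(String[] args) {
        System.out.println(parse("liran, 2091, 21/10/1980, 90109"));
    }
}
```

A parser that throws on malformed input lets the caller decide whether to skip the line or stop. The alternative is quietly producing a record with a missing date.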

Processing Data with ItemProcessor

Next, we write our own item processor, called CustomItemProcessor. It passes the original object from the reader on to the writer unchanged and does no processing of the transaction object.

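```java
import org.springframework.batch.item.ItemProcessor;

public class CustomItemProcessor implements ItemProcessor<Transaction, Transaction> {

    @Override
    public Transaction process(Transaction item) {
        // Log the item and pass it through unchanged.
        System.out.println("Processing..." + item);
        return item;
    }
}
```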
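A processor can also drop items. In Spring Batch, returning `null` from `process` filters the item out, so it never reaches the writer. The plain-Java sketch below imitates that contract with a hypothetical rule: drop non-positive amounts and lowercase the usernames. `FilteringProcessorSketch`, its `Txn` record, and the rule itself are assumptions for illustration only.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.function.UnaryOperator;

// Hypothetical illustration of the "return null to filter" processor contract.
class FilteringProcessorSketch {

    record Txn(String username, double amount) { }

    // Like ItemProcessor.process: a null result means "filter this item out".
    static final UnaryOperator<Txn> PROCESSOR = item ->
            item.amount() <= 0 ? null
                    : new Txn(item.username().toLowerCase(Locale.ROOT), item.amount());

    // Applies the processor to each item and keeps only the non-null results,
    // which is what the step would pass on to the writer.
    static List<Txn> processAll(List<Txn> items) {
        List<Txn> toWrite = new ArrayList<>();
        for (Txn item : items) {
            Txn result = PROCESSOR.apply(item);
            if (result != null) {
                toWrite.add(result);
            }
        }
        return toWrite;
    }

    public static void main(String[] args) {
        System.out.println(processAll(List.of(new Txn("Liran", 90109), new Txn("Rabbit", -5))));
    }
}
```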

Writing Objects to the File System with ItemWriter

Finally, we store the transactions in an XML file located at xml/output.xml, using the itemWriter bean from SpringBatchConfig:

```java
@Bean
public ItemWriter<Transaction> itemWriter(Marshaller marshaller) {
    StaxEventItemWriter<Transaction> itemWriter = new StaxEventItemWriter<Transaction>();
    itemWriter.setMarshaller(marshaller);
    itemWriter.setRootTagName("transactionRecord");
    itemWriter.setResource(outputXml);
    return itemWriter;
}
```
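`StaxEventItemWriter` writes the marshalled items through StAX inside the `transactionRecord` root tag. To give a feel for the resulting file, the JDK-only sketch below produces a document of roughly that shape with `javax.xml.stream.XMLStreamWriter`. It is only an approximation. `XmlOutputSketch` and its `Txn` record are hypothetical, and the real JAXB output (element order, number and date formatting) may differ.

```java
import java.io.StringWriter;
import java.util.List;
import javax.xml.stream.XMLOutputFactory;
import javax.xml.stream.XMLStreamException;
import javax.xml.stream.XMLStreamWriter;

// Hypothetical JDK-only approximation of the writer's output shape.
class XmlOutputSketch {

    record Txn(String username, int userId, String transactionDate, double amount) { }

    static String toXml(List<Txn> items) {
        StringWriter out = new StringWriter();
        try {
            XMLStreamWriter xml = XMLOutputFactory.newInstance().createXMLStreamWriter(out);
            xml.writeStartDocument();
            xml.writeStartElement("transactionRecord");      // root tag (setRootTagName)
            for (Txn t : items) {
                xml.writeStartElement("transactionRecord");  // one element per item
                writeField(xml, "username", t.username());
                writeField(xml, "userId", String.valueOf(t.userId()));
                writeField(xml, "transactionDate", t.transactionDate());
                writeField(xml, "amount", String.valueOf(t.amount()));
                xml.writeEndElement();
            }
            xml.writeEndElement();
            xml.writeEndDocument();
            xml.flush();
            xml.close();
        } catch (XMLStreamException e) {
            throw new IllegalStateException("Could not write XML", e);
        }
        return out.toString();
    }

    // Writes <name>value</name>, escaping the value as needed.
    private static void writeField(XMLStreamWriter xml, String name, String value)
            throws XMLStreamException {
        xml.writeStartElement(name);
        xml.writeCharacters(value);
        xml.writeEndElement();
    }

    public static void main(String[] args) {
        System.out.println(toXml(List.of(new Txn("liran", 2091, "1980-10-21", 90109))));
    }
}
```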

Configuring the Batch Job

So now all we have to do is connect all the dots with a job. With Java configuration, we do this through the job builder: the firstBatchJob bean starts with step1.

Take note of the chunk size passed to chunk(10) in step1. It is the equivalent of the commit-interval attribute in XML configuration. It sets how many transactions are kept in memory before they are handed to the itemWriter and the transaction is committed. Transactions are held in memory until that point, or until the end of the input data is reached.

```java
@Bean(name = "firstBatchJob")
public Job job(@Qualifier("step1") Step step1) {
    return jobs.get("firstBatchJob").start(step1).build();
}
```

Running the Batch Job

That’s it – now let’s set up and run everything:

```java
import org.springframework.batch.core.Job;
import org.springframework.batch.core.JobExecution;
import org.springframework.batch.core.JobParameters;
import org.springframework.batch.core.launch.JobLauncher;
import org.springframework.context.annotation.AnnotationConfigApplicationContext;

public class App {

    public static void main(String[] args) {
        // Spring Java config
        AnnotationConfigApplicationContext context = new AnnotationConfigApplicationContext();
        context.register(BatchConfig.class);
        context.register(SpringBatchConfig.class);
        context.refresh();

        JobLauncher jobLauncher = (JobLauncher) context.getBean("jobLauncher");
        Job job = (Job) context.getBean("firstBatchJob");
        System.out.println("Starting the batch job");
        try {
            JobExecution execution = jobLauncher.run(job, new JobParameters());
            System.out.println("Job Status : " + execution.getStatus());
            System.out.println("Job completed");
        } catch (Exception e) {
            e.printStackTrace();
            System.out.println("Job failed");
        }
    }
}
```
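`App` launches the job with an empty `JobParameters`. Spring Batch identifies a job instance by the job name plus its identifying parameters. It refuses to rerun an instance that has already completed, throwing `JobInstanceAlreadyCompleteException`, while a failed instance can be restarted. In this example that rarely matters, because `dataSourceInitializer` recreates the repository tables on every start. The hypothetical in-memory model below (`JobInstanceSketch` is not a Spring Batch class) captures the rule. It also shows why adding a parameter that changes per run, such as a timestamp, yields a fresh instance.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory model of job-instance identity; not Spring Batch code.
class JobInstanceSketch {

    // Latest status recorded per job instance key (job name + parameters).
    private final Map<String, String> statusByInstance = new HashMap<>();

    String launch(String jobName, Map<String, String> params, boolean succeeds) {
        // Same name and same parameters => same instance. A TreeMap gives a
        // stable key regardless of the order in which parameters were added.
        String key = jobName + new TreeMap<>(params);
        if ("COMPLETED".equals(statusByInstance.get(key))) {
            throw new IllegalStateException("Job instance already complete: " + key);
        }
        // A FAILED instance may be launched again with the same parameters.
        String status = succeeds ? "COMPLETED" : "FAILED";
        statusByInstance.put(key, status);
        return status;
    }

    public static void main(String[] args) {
        JobInstanceSketch repo = new JobInstanceSketch();
        System.out.println(repo.launch("firstBatchJob", Map.of(), true));
        try {
            repo.launch("firstBatchJob", Map.of(), true);
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
        // A parameter that changes per run creates a new instance.
        System.out.println(repo.launch("firstBatchJob", Map.of("run.ts", "1"), true));
    }
}
```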

Summary

This lesson provided a basic idea of how to work with Spring Batch and how to use it in a very simple use case. We first visualized how batch jobs work and then built an example around that.

All the source code used in this lesson can be found in this GitHub project. It should be easy to run.
